The Language Processing Group

1st October, 2001

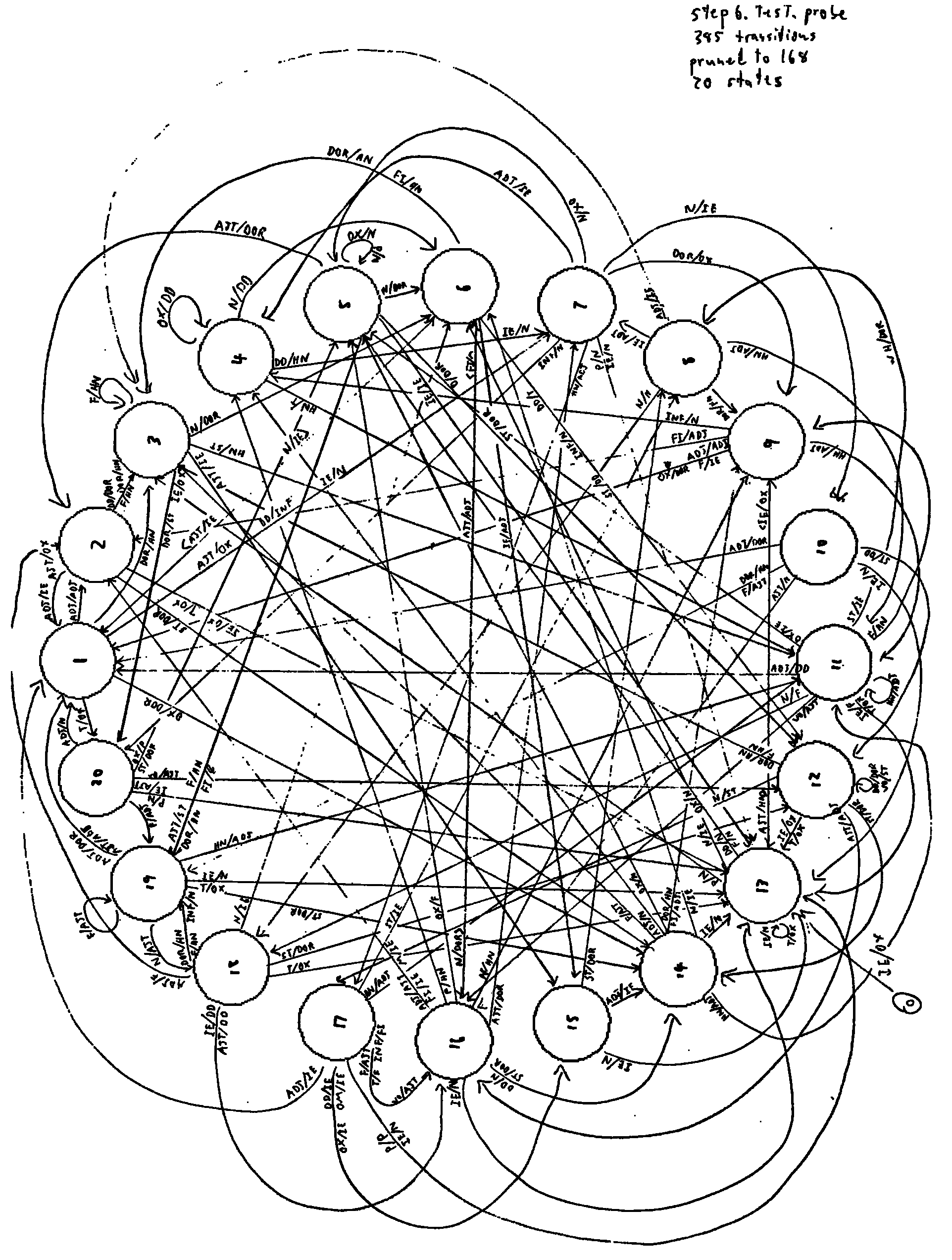

The LPG was a long-running project under the old Machine Learning Research Centre at the Queensland University of Technology, led by Joachim Diederich. Among other avenues in which the whole group was involved, and I investigated training Elman Simple Recurrent Networks on transcribed spoken-language data. We performed soft clustering of the context layer during training, to facilitate the extraction of deterministic Finite State Automata (dFSA) with fewer states and lower prediction error rates.

We investigated using standard spherical clustering techniques, while also developing a hyper-ellipsoidal correlation measure and an algorithm to employ it. The goal was to better identify the elongated clusters we saw forming in state-space. Unfortunately the Machine Learning Research Centre ceased activities before this new technique could be fully realised.

A Technical Report was written to record the research we performed, and is available under Publications.

Software

The software listed here is ©2001 Dylan Muir, except where indicated otherwise. Please feel free to re-use this code, but please acknowledge its origins.

tlearn reverse engineering excerpt

download excerpt

tlearn is a neural network simulator written by Jeff Elman and others. In the course of the Language Processing Group project, I reverse-engineered and modified tlearn to perform on-line clustering while training. This modification is called tlavq (for Adaptive Vector Quantisation).

The code contained in this excerpt from the LPG projectTechnical Report is copyright Jeff Elman and the authors of tlearn. Please see the tlearn software page for information on re-distribution.

dstat

download description

download code

dstat will extract the uni-gram and bi-gram statistics for a data set, based on a {name}.pattern file.

SymStrip

download description

download code

SymStrip takes a transcribed language corpus with the words tagged with their word type (vowel, noun, etc) and separates the tags and words into separate text files. SymStrip can mark superfluous tags and insert reset markers at sentence boundaries.

MakeFSA

download description

download code

MakeFSA constructs a Finite State Automaton (FSA) definition from the probed hidden unit activations from a recurrent Elman network simulated with tlearn. A cluster analysis program is required to extract the locations of the FSA's states within the hidden unit space. The resulting clusters are loaded into MakeFSA. Transition tables are generated for the hidden unit activation data, and used to construct a deterministic FSA.

pattern

download description

download code

pattern generates .pattern files from tlearn .teach and .data files. .pattern files are used in several other applications, such as dstat and MakeFSA.

vector

download description

download code

vector takes a data set of known tags and sentence boundaries, and writes the corresponding tlearn .teach and .data files. The output can be written in both localist and distributed representations, and the input and output lines can be either binary or normalised together.

tlbe

download description

download code

tlbe stands for tlearn bit error. It can extract the true error (not the averaged error generated by tlearn) for a distributed output and target. It will give the number of incorrect predictions over a tlearn run for a one-step-lookahead task.

sclust

download description

download code

sclust performs adaptive spherical cluster analysis on a data set. The data can be of any dimensionality. sclust uses a modified adaptive Forgy's algorithm, and is deterministic (i.e. the analysis only needs to be performed once, and will always return the best result for the algorithm used).

o2clust

download description

download code

o2clust performs cluster analysis on a set of data. Although the correlation-matrix cluster representation implemented in the corrmatrix module is complete, the algorithm does not work at present. The problem seems to lie with forming clusters that have too few points, and therefore are unnaturally biased along an arbitrary axis. When forming clusters by adding points (as opposed to splitting larger clusters into progressively smaller clusters) the clusters grow from a few points to encompass (hopefully) a natural cluster. However, when a cluster contains one or two points, the correlation matrix is either singular or very close, and the resulting "shape"; of the cluster is merely the axis through the two points. This severe skew persists until a greater number of points are used to form the matrix.

For more information, read the Technical Report.

tlavq

download description

download code

tlavq is an extended implementation of tlearn. tlavq performs learning on a user-defined neural network, much in the same way as tlearn, except that tlavq can also perform on-line cluster analysis of specified neurons, with the intention of using this analysis to either partially or wholly classify the specified neuron's activations into another set of nodes.

The purpose of this was to implement the online clustering architecture outlined in Das and Moser [1998], but tlavq retains tlearn's inherent flexibility. The network can be configured to any architecture possible in tlearn, and clustering can be turned off entirely. With this feature disabled, the program behaves identically to tlearn.

The source code also serves as an example to aid in the further extension of tlearn.