Brains and Machines podcast

17th May, 2024

I had the privilege of speaking with Sunny Bains on the Brains and Machines podcast. Listen in to learn about SynSense's low-power sensory processors and the state of neuromorphics in 2024.

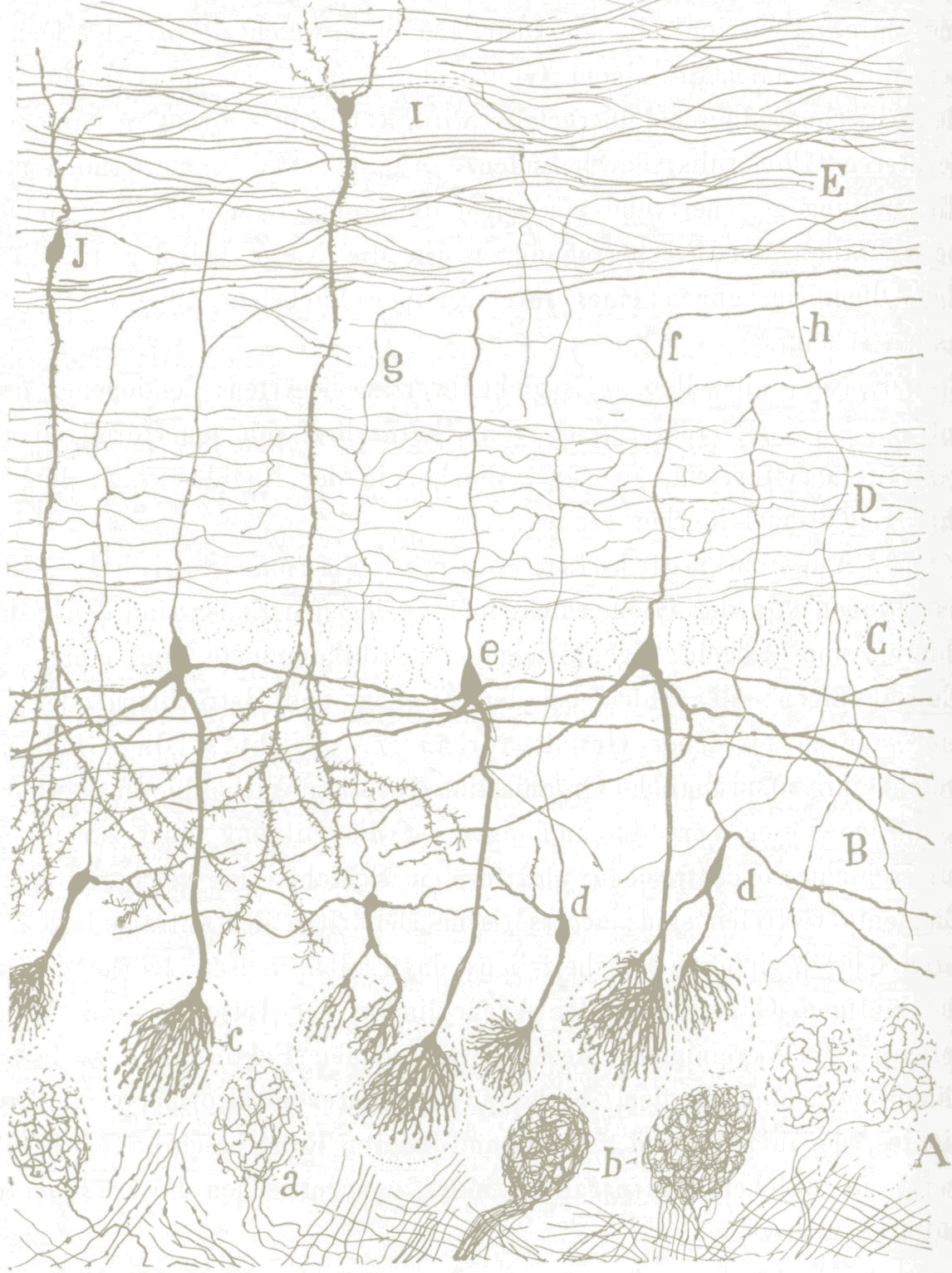

Histology of the Nervous System of Man and Vertebrates, Ramón y Cajal (1995)




LIF neuron model


AI is extremely power-hungry, but brain-inspired computing units can perform machine learning inference at sub-milliwatt power. Learn about SynSense's low-power computing architectures, which use quantised spiking neurons for ML inference. I presented this slide deck at the UWA Computer Science seminar in Perth.
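As a rough illustration of what a quantised spiking neuron computes, here is a minimal sketch of an integer-only leaky integrate-and-fire (LIF) neuron. It is not SynSense's implementation: the bit-shift decay, the reset-by-subtraction rule, the threshold value and the names `lif_step` and `run_lif` are all hypothetical choices for illustration. Leak is applied with right shifts instead of floating-point multiplication, which keeps every state variable an integer.

```python
def lif_step(v, i_syn, x_in, *, syn_shift=2, mem_shift=3, threshold=64):
    """One time step of a hypothetical integer LIF neuron.

    v       -- membrane potential (int)
    i_syn   -- synaptic current (int)
    x_in    -- weighted integer input arriving this step
    Returns the updated (v, i_syn, spike) with spike in {0, 1}.
    """
    # Synaptic current decays by 1/2**syn_shift each step, then adds the input.
    i_syn = i_syn - (i_syn >> syn_shift) + x_in
    # Membrane potential leaks by 1/2**mem_shift, then integrates the current.
    v = v - (v >> mem_shift) + i_syn
    spike = 1 if v >= threshold else 0
    if spike:
        v -= threshold  # reset by subtracting the threshold
    return v, i_syn, spike


def run_lif(inputs, **params):
    """Simulate one neuron over a sequence of integer inputs; return its spike train."""
    v, i_syn = 0, 0
    spikes = []
    for x in inputs:
        v, i_syn, s = lif_step(v, i_syn, x, **params)
        spikes.append(s)
    return spikes


print(run_lif([20] * 3))  # [0, 0, 1]
```

With a constant input of 20, the current and potential build up over a few steps and the neuron fires on the third one; with no input it stays silent. Changing the shift amounts changes the neuron's time constants without introducing any multiplications.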