The Cheap Vision project

FPGA-based vision processing

10th January, 2004

Supervised by Associate Professor Joaquin Sitte and Dr Shlomo Geva, I designed an FPGA-based vision processing system. The processor implements massively-parallel feature extraction algorithms, as well as traditional sequential algorithms. The key design goal is computationally inexpensive vision. The processor can output a user-definable stream of extracted features, encoded in a flexible format. This is in contrast to traditional massively-parallel architectures such as silicon retinas, which perform processing but do not significantly reduce the required communications bandwidth. This system also eliminates the need for off-board DSP hardware as well as image stream encoding.

Overview

This project will implement a small, cheap, powerful and flexible vision processor, for use in on-board vision systems. The standard approach for vision processing is to use a stationary camera coupled with some powerful digital signal processing (DSP) hardware. This hardware is expensive and usually too bulky to be practical in an on-board application. Some systems such as the EyeBot have incorporated cheap ‘internet camera’ products into small autonomous robots. These systems rely on the system CPU to handle all aspects of image acquisition as well as processing and control. Silicon retinas and other analogue vision chips have been used to implement parallelised image processing algorithms with much success.

The ideal vision sensing tool would perform on-board image acquisition and feature extraction, with the majority of the image processing done independently of the system CPU. Our design will incorporate an image sensing device with its output fed directly into an FPGA. This will enable real-time acquisition of the image, with no intervening encoding or modulation stages required. The features or processed images will be output onto a bus using a standardised communication protocol.

The resulting processor will be small enough to be incorporated into small robotic and vision systems. The processor will be a feasible replacement for other, more standard sensors such as infra-red and ultrasonic sensors.

Project Details

Introduction

This project will implement a small, cheap, powerful and flexible vision processor, for use in on-board vision systems. The processor will be based on recent technological advances and computationally inexpensive parallel vision processing algorithms.

DSP techniques

The standard approach for vision processing is to use a stationary camera coupled with some powerful digital signal processing (DSP) hardware. Most vision processing is done off-board, using powerful PC solutions. On-board processing power in autonomous systems is usually insufficient to handle vision processing in addition to actuator control. Off-board DSP systems such as the one in Figure 1 require a camera, encoding and decoding of the image, wireless transmission and a frame grabber housed in a PC with DSP capabilities. This hardware is expensive and too bulky to be practical in an on-board application.

The Imputer

The development of the Imputer was an attempt to create a flexible, modular image processing device. It consisted of a single system bus, onto which components such as CPU cards, frame grabber cards and I/O cards could be added. The configuration of these cards was limited; only one CPU per system was allowed, although multiple frame grabbers were supported. The architecture of the system was fundamentally monolithic; the single von-Neumann processor was used to perform all the image processing.

Additionally, the Imputer was housed in an external case and so could not be used in an on-board autonomous system for miniature robotics. The Imputer is no longer being manufactured.

Current On-board Autonomous Systems

Some systems such as the EyeBot have incorporated cheap 'internet camera' products into small autonomous robots. These systems rely on the system CPU to handle all aspects of image acquisition as well as processing and control, as shown in Figure 2. Because of the high bandwidth requirements of real-time video, a compromise is usually made either in terms of frame rate or in terms of resolution. The EyeBot team have recently produced their own (low-resolution) camera for use with their robot controller, which is capable of acquiring full-colour images at a rate of 3.7 frames per second with a 25 MHz CPU clock.

Analogue Vision Chips

Silicon retinas and other analogue vision chips have been used to implement parallelised image processing algorithms with much success. These chips are usually not very flexible, since they implement a fixed set of algorithms in hardware, which cannot be modified. They perform image acquisition and processing on the one die, and therefore do not require the power of a fully-fledged DSP system. Because the processing is done locally, they leave the system CPU free to perform other tasks.

The ideal vision sensing tool would perform on-board image acquisition and feature extraction, with the majority of the image processing done independently of the system CPU. The tool would be cheap both to manufacture and purchase, using mostly off-the-shelf components. The processing algorithms used and the output of the tool would be extremely flexible and customisable. The tool would enable powerful real-time control based on real-time feature extraction.

Background

CCDs and Image Sensors

Image acquisition requires a light-sensitive structure to detect the image. Charge-coupled devices, or CCDs, have been the most popular choice for many years. The silicon substrate of the chip is sensitive to incident photons, and generates a charge corresponding to the amount of light falling upon the sensitive area. This charge is transferred sequentially off the device; the resulting voltages are measured at the edge of the chip and converted to digital values by an Analogue to Digital Converter (ADC). CCDs require intricate clocking arrangements, off-chip ADCs and high-voltage supplies.

The recent availability of entire 'image sensors' on a chip means smaller footprints and low-voltage applications are now possible. An image sensor incorporates a CCD, parallel clock drivers, voltage regulators and a fast ADC into a single chip. The image is read sequentially as a digital signal. These chips are low-voltage, require much simpler clock driver circuits and have a footprint only slightly larger than a CCD alone.

Colour images are obtained by placing a mask of silicon embedded with colour dyes above an ordinary CCD. This results in an array of pixels that are sensitive to blue, green and red light. Adjacent pixels are interpolated on-chip to give a colour image. Because of this interpolation there is a loss of colour resolution, but this is perhaps comparable to the human retina, which provides high-resolution contrast data, but only low-resolution colour.
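The loss of colour resolution can be illustrated with a minimal sketch. It assumes the colour mask repeats a 2×2 tile with one red, two green and one blue pixel (an RGGB layout, which the text above does not specify), and it uses the crudest possible scheme, collapsing each tile into a single colour pixel. This is not necessarily the interpolation any particular sensor performs; the function name `demosaic_2x2` is hypothetical.

```python
# Assumed 2x2 colour filter tile (RGGB); the layout is an assumption:
#   R G
#   G B

def demosaic_2x2(mosaic):
    """Collapse each 2x2 RGGB tile of a raw mosaic into one (R, G, B) pixel.

    `mosaic` is a list of rows of integer intensities with even width and
    height. The result has half the width and half the height of the input.
    """
    height, width = len(mosaic), len(mosaic[0])
    if height % 2 or width % 2:
        raise ValueError("mosaic dimensions must be even")
    image = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            red = mosaic[y][x]
            # Two green samples per tile: take their integer mean.
            green = (mosaic[y][x + 1] + mosaic[y + 1][x]) // 2
            blue = mosaic[y + 1][x + 1]
            row.append((red, green, blue))
        image.append(row)
    return image


# A 4x2 raw mosaic (two tiles side by side) becomes a 2x1 colour image.
raw = [
    [200, 100, 10, 50],
    [120, 30, 70, 90],
]
rgb = demosaic_2x2(raw)
```

Eight raw samples yield only two colour pixels here, which is the trade-off described above: full-resolution intensity data, but colour at a coarser grid.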

Kodak provides a range of image sensors and CCDs with varying resolutions and frame rates.

| Resolution | Chip type | Frame rate |
|---|---|---|
| 1280×1024 | Image sensor | 14 fps at 18 MHz clock |
| 640×480 | Image sensor | 30 fps at 10 MHz clock |
| 2048×2048 | Interline CCD | 20 fps at 20 MHz clock |
| 640×480 | Interline CCD | 85 fps at 20 MHz clock |

Resolutions of up to 4096×4096 are available, although with correspondingly reduced frame rates. These higher resolution chips may not be suitable for real-time control.
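To see what these figures mean for real-time processing, the pixel throughput each chip implies can be worked out directly from the table. The sketch below is only arithmetic on the listed numbers; the function names are hypothetical, and relating pixel rate to the quoted clock is an illustrative assumption rather than something the data sheets are known to state.

```python
# (label, width, height, frames per second, clock in Hz), copied from the table.
SENSORS = [
    ("1280x1024 image sensor", 1280, 1024, 14, 18_000_000),
    ("640x480 image sensor", 640, 480, 30, 10_000_000),
    ("2048x2048 interline CCD", 2048, 2048, 20, 20_000_000),
    ("640x480 interline CCD", 640, 480, 85, 20_000_000),
]


def pixel_rate(width, height, fps):
    """Pixels that must leave the chip every second."""
    return width * height * fps


def pixels_per_clock(width, height, fps, clock_hz):
    """Pixel rate relative to the quoted clock frequency."""
    return pixel_rate(width, height, fps) / clock_hz


for label, width, height, fps, clock_hz in SENSORS:
    rate = pixel_rate(width, height, fps)
    ratio = pixels_per_clock(width, height, fps, clock_hz)
    print(f"{label}: {rate:,} pixels/s, {ratio:.2f} pixels per clock")
```

The two image sensors come out at roughly one pixel per clock cycle, while the interline CCD figures imply more than one. The sketch does not explain that difference; it only indicates the sustained pixel rate a downstream processor would have to accept.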

FPGAs

To provide acquisition control and processing, we envisage using Field-Programmable Gate Arrays (FPGAs), which are essentially fully-customisable hardware. FPGAs can be programmed via logic circuit schematics or through VHDL (a hardware description language). FPGAs are capable of encapsulating an entire traditional von-Neumann microprocessor, or alternatively, of implementing massively-parallel processing structures. Because their hardware is re-configurable, parallel algorithms execute much faster than on a typical fetch-and-execute architecture. They are re-programmable, and can be modified without being removed from the circuit board.

In this project, FPGAs will be used to control the vision input device (including exposure and frame acquisition) without needing separate frame-grabber hardware. FPGAs will also be used for filtering and processing the acquired image, as well as handling communication with other system components.

Project Description

The project will involve the design and implementation of a small, cheap, flexible and powerful real-time image processor, able to be used in a diverse range of imaging applications. Part of the project will be to identify and implement a range of useful image filters and feature extraction methods. The image processor will output feature information onto a standardised communication bus. The bus will be flexible enough that these features can be used directly for real-time control, or can be processed further for other applications.
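As a rough illustration of why a feature stream needs far less bandwidth than raw images, the sketch below packs features into fixed-size records. The record layout, the type codes and the function names are all hypothetical; the project text does not define the actual output format or bus protocol.

```python
import struct

# Hypothetical record: type code (1 byte), x, y, magnitude (2 bytes each),
# little-endian with no padding.
FEATURE_FORMAT = "<BHHH"
FEATURE_SIZE = struct.calcsize(FEATURE_FORMAT)

EDGE, BLOB = 1, 2  # hypothetical feature type codes


def encode_features(features):
    """Pack (type, x, y, magnitude) tuples into one byte string."""
    return b"".join(struct.pack(FEATURE_FORMAT, *f) for f in features)


def decode_features(payload):
    """Unpack a byte string produced by encode_features."""
    return list(struct.iter_unpack(FEATURE_FORMAT, payload))


features = [(BLOB, 320, 240, 150), (EDGE, 12, 400, 87)]
payload = encode_features(features)

# Size of one raw frame, assuming 640x480 at 8 bits per pixel.
raw_frame_bytes = 640 * 480
print(f"{len(payload)} bytes of features vs {raw_frame_bytes} bytes of raw frame")
```

Under these assumptions, a frame that yields a handful of features is described in tens of bytes instead of hundreds of kilobytes, which is what would let a modest bus and system CPU use the output directly for control.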

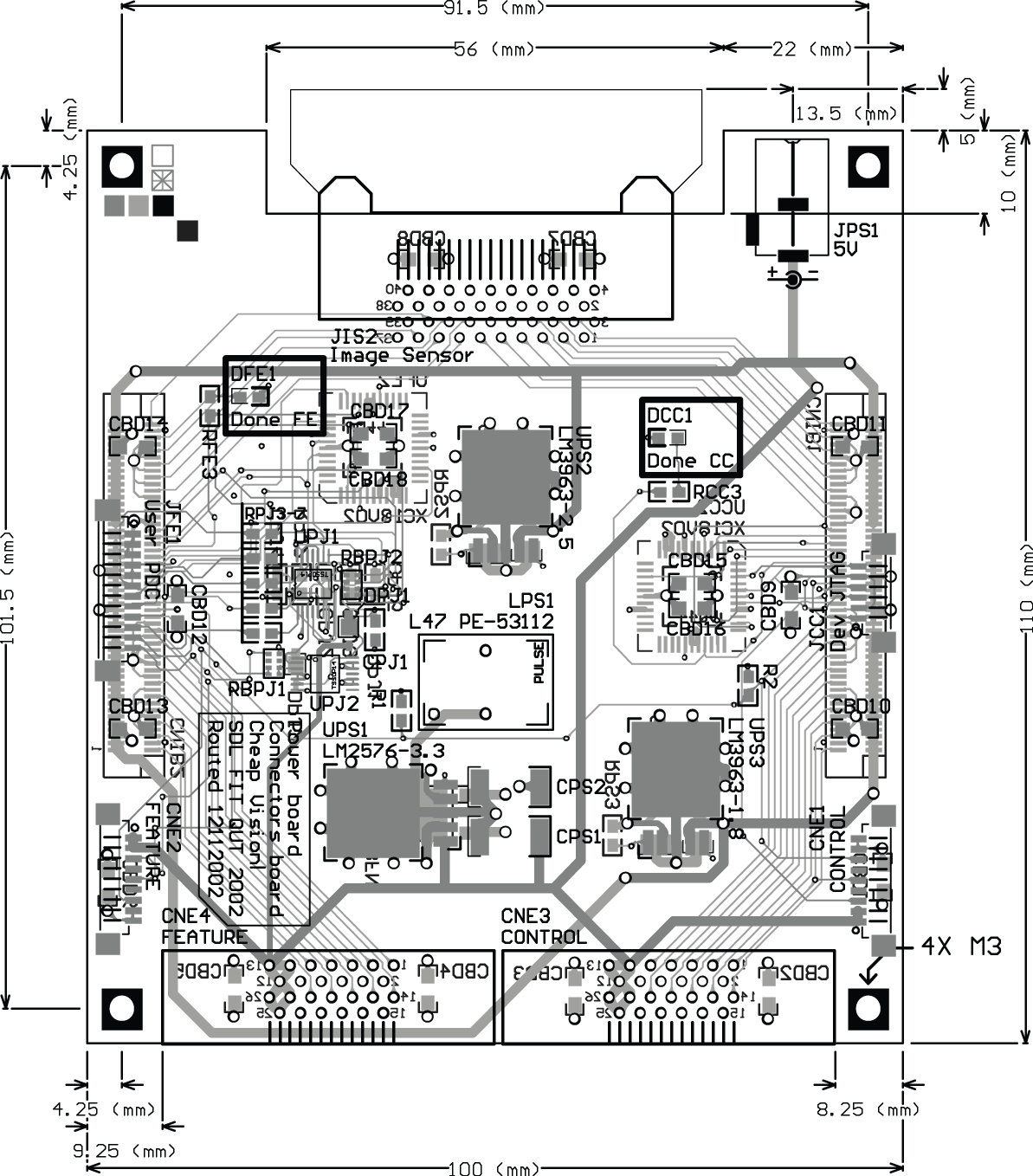

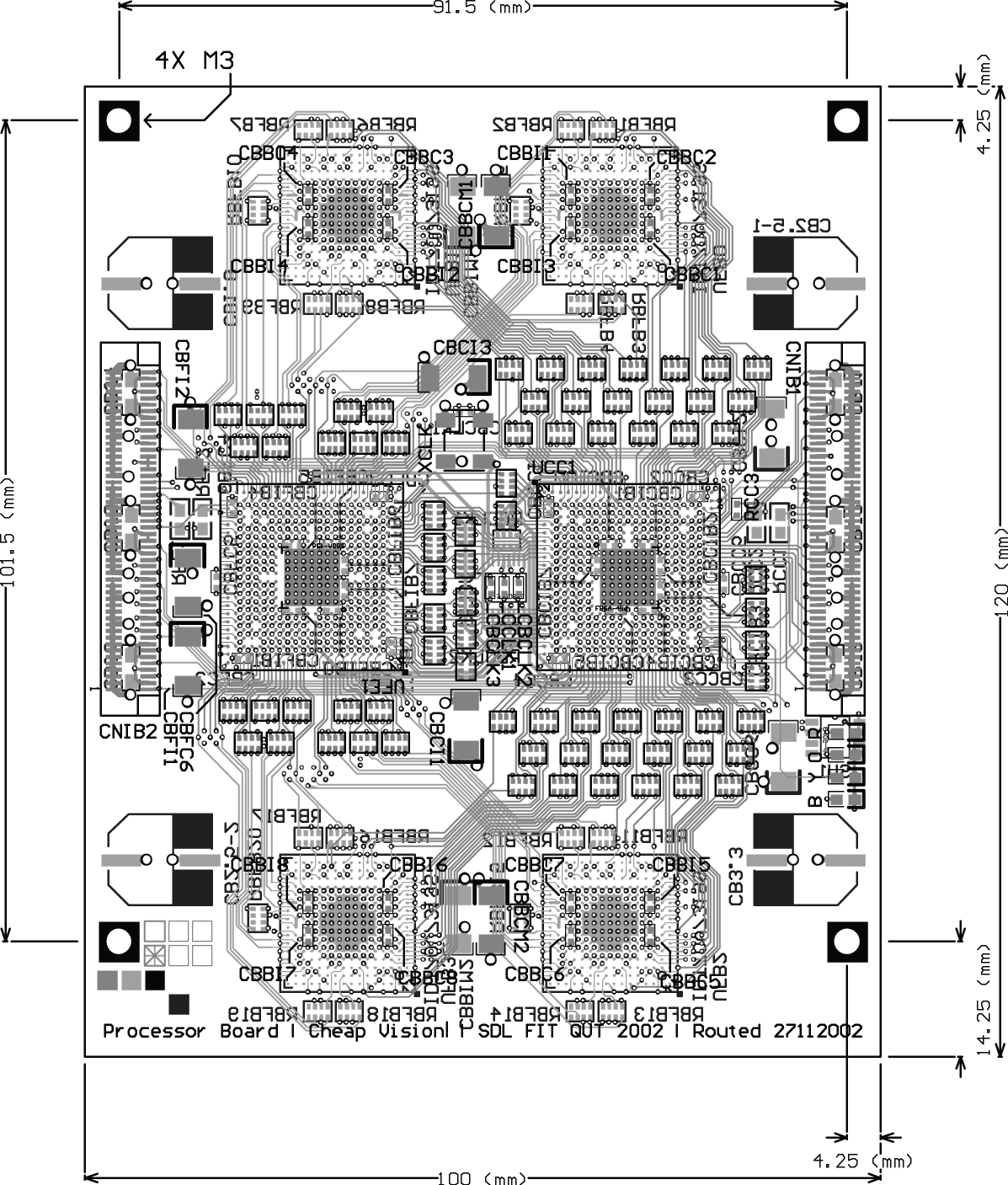

The design will incorporate an image sensing device with its output fed directly into an FPGA. This will enable real-time acquisition of the image, with no intervening encoding or modulation stages required, as shown in Figure 3. The FPGA will perform various filtering and feature extraction operations, such as region segmentation, blob and edge detection, noise reduction, optical flow, etc. The implementation of these algorithms will be as parallel as possible, to make full use of the concurrency available in the FPGA. The features or processed images will be output onto a bus using a standardised communication protocol.
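Edge detection is a convenient example of an operation that suits this kind of parallel implementation, because each output value depends only on a 3×3 neighbourhood of input pixels. The sketch below uses the Sobel operator with the |gx| + |gy| magnitude approximation; this is a common textbook choice, not necessarily the algorithm used in the project. Python evaluates the pixels one after another, so the code only illustrates the arithmetic that parallel hardware could evaluate for many pixels at once. The names `sobel_at` and `edge_features` are hypothetical.

```python
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_at(image, x, y):
    """Approximate gradient magnitude (|gx| + |gy|) at one interior pixel."""
    gx = gy = 0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            pixel = image[y + dy][x + dx]
            gx += SOBEL_X[dy + 1][dx + 1] * pixel
            gy += SOBEL_Y[dy + 1][dx + 1] * pixel
    return abs(gx) + abs(gy)


def edge_features(image, threshold):
    """Return (x, y, magnitude) for interior pixels at or above threshold."""
    height, width = len(image), len(image[0])
    features = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            magnitude = sobel_at(image, x, y)
            if magnitude >= threshold:
                features.append((x, y, magnitude))
    return features


# 5x5 greyscale test image with a vertical step edge between columns 1 and 2.
image = [[0, 0, 100, 100, 100] for _ in range(5)]
edges = edge_features(image, threshold=200)
```

Only the pixels next to the step are reported, so the output is a short list of features and not a full processed image.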

The resulting processor will be small enough to be incorporated into small robotic and vision systems. Anticipated applications include on-board vision sensors for mobile robotics, intelligent vision processors for visual inspection and control, smart security and identification systems, and a host of other applications. The processor will be a feasible replacement for other, more standard sensors such as infra-red and ultrasonic sensors.

In other words, this processor will elevate vision into an off-the-shelf sensor option; as easy to incorporate into applied systems as any other basic component.

Objectives

The primary objective of the vision processor is that it will be able to perform real-time filtering and feature extraction, such that the output of the processor can be used in a real-time control system. This also means that the communications interface between the processor and external components should be simple but comprehensive.

The filters and feature extraction algorithms will be able to be combined in a flexible manner, such that the output of the processor is useful for control and identification applications. Additionally, the lens used for acquisition will be changeable, to accommodate different applications.

The processor will use off-the-shelf components as much as possible (excepting the image sensor and FPGAs) to keep the total cost of producing the system to a minimum.

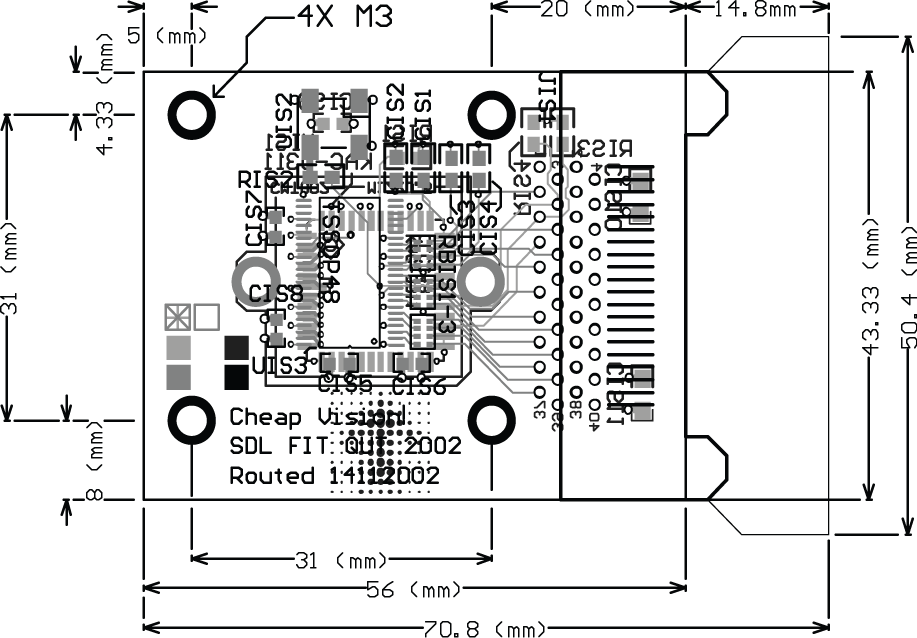

The processor will be as compact as possible, to ensure it is useable on-board in small applications. The QUTy robot, developed in the Smart Devices Lab at QUT, is approximately 10 centimetres wide. The vision processor will have a similar form factor.

Research team

Researchers

Associate Professor Joaquin Sitte

Dr Shlomo Geva

Research Assistant

Dylan Muir

Publications

This work was presented at AMiRE 2003: DR Muir, J Sitte. 2003. Seeing cheaply: flexible vision for small devices. Proceedings of the 2nd International Symposium on Autonomous Minirobots for Research and Edutainment (AMiRE 2003), February 2003, Brisbane, Australia.